Local Maximum

My wife worked on MRI machines for her PhD. One thing that surprised me when I learned about medical research is how brutal it is. Moving anything forward takes an awful lot of hard work - really brutal hard work.

But it makes sense. Climbing the tech tree on the medical side of things is obviously beneficial. Quality of life. Child mortality. The stakes are high enough that people work hard on hard problems and don’t give up. The usual arguments for four-day weeks, or for ignoring messages outside office hours, start to seem weaker when the stakes are this high.

People work famously hard at the big AI labs too. Partly that's because there’s a race between them. But if you listen to interviews, they believe they’re climbing the tech tree in ways that could benefit humanity even more broadly than medicine. Demis Hassabis shared the 2024 Nobel Prize in Chemistry for protein structure prediction - and that’s just one application. You could see their concerns as a superset of the medical end of things.

The split

AI 2027 lays out a scenario in which much more formidable AI comes online within the next couple of years. But the authors don’t claim to know exactly which improvement will get us there. It’s effectively just “line keep going” - because betting against the line would have been wrong for decades now.

I’m sympathetic to this on a gut level.

But within the field - particularly at the machine learning end, and in some of the labs - there are extremely well-regarded people who don’t believe the current paradigm will take us from here to there.

Yann LeCun has been consistent: “There’s absolutely no way that autoregressive LLMs, the type that we know today, will reach human intelligence. It’s just not going to happen.” Many guests on Machine Learning Street Talk say similar things. Transformers won’t get to AGI (whatever that means). Scaling is saturating.

It seems paradoxical for the industry to be split between “foom” and “it’s so over.” These are not close positions.

Petrol stations in the mist

I’m confident nobody knows. ChatGPT came as a surprise even to OpenAI - they thought it was just a little demo project. It became, at the time, the fastest-growing consumer application ever, reportedly reaching 100 million users in about two months.

So there’s obviously a risk my intuitions are off about the trajectory.

Ilya Sutskever is known for making breakthroughs - AlexNet, sequence-to-sequence learning, the work that led to GPT. That breakthroughs were needed at all suggests there was something of a wall coming each time. Or at least that the flow of progress was blocked until someone found the next thing.

We’ve gone through phases. Reinforcement learning is an obvious recent example - a bit more afterburner on top of scale, training data, and inference-time compute. If you assume nothing new turns up, we’re always bound to start stalling. You’re driving along and the fuel gauge is going down.

But then a petrol station emerges out of the mist. And before you know it, things are healthy again.

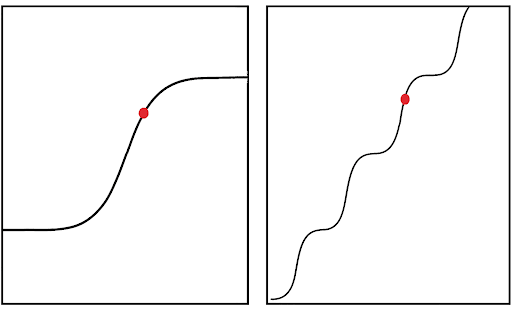

You can’t plan for the petrol stations. But they’ve been emerging at a consistent enough rate to keep things moving. Historically, overlapping sigmoids have formed something that looks like an exponential. Individual paradigms plateau, but progress continues.
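To make the overlapping-sigmoids idea concrete, here's a toy sketch. The numbers are invented, not fitted to any real data: each "paradigm" is a logistic curve, a new one arrives every 5 time units, and each has a ceiling three times higher than the one before. The function names and parameters are hypothetical, chosen only to show the shape.

```python
import math


def logistic(t: float, ceiling: float, midpoint: float, steepness: float = 1.0) -> float:
    """One paradigm: an S-curve that rises around `midpoint` and flattens at `ceiling`."""
    return ceiling / (1.0 + math.exp(-steepness * (t - midpoint)))


def stacked_progress(t: float, n_paradigms: int = 10, spacing: float = 5.0, growth: float = 3.0) -> float:
    """Total capability at time t: the sum of staggered S-curves.

    Assumption (toy numbers, not real data): a new paradigm arrives every
    `spacing` time units, and each one's ceiling is `growth` times the last.
    """
    return sum(
        logistic(t, ceiling=growth ** k, midpoint=k * spacing)
        for k in range(n_paradigms)
    )


if __name__ == "__main__":
    # Sample the total at each paradigm's midpoint and show the step-to-step ratio.
    previous = None
    for j in range(10):
        total = stacked_progress(j * 5.0)
        ratio = "" if previous is None else f"  (x{total / previous:.2f})"
        print(f"t={j * 5:>2}  total={total:10.1f}{ratio}")
        previous = total
```

Each curve on its own plateaus, but the step-to-step ratio of the total settles near 3, which is what an exponential looks like. Cap `n_paradigms` and the total flattens out after the last midpoint: no more petrol stations.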

I don’t know which camp is right. The “it’s so over” crowd includes serious people with deep technical knowledge. The “foom” crowd includes people who’ve been right about trajectory for years.

What I do know is that both positions require something unusual to be true. Either we’re about to hit a wall that ends decades of compounding progress, or we’re about to see capabilities that would have seemed like science fiction five years ago.

One of those is going to look obvious in hindsight. I just don’t know which one yet.