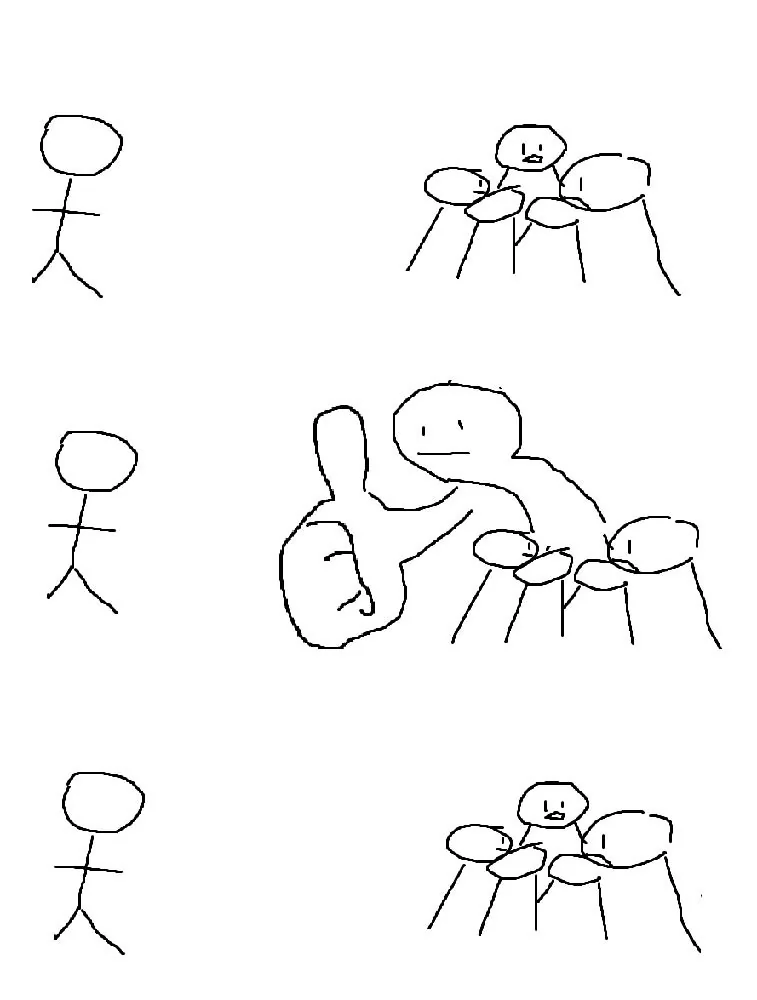

The Huddle

I’ve been using agents all day on a relatively complex project. I give the model a task, follow it closely, and spot what looks like an oversight. After months of catching subtle errors - hallucinated imports, slightly wrong method signatures, off-by-one mistakes - there’s a muscle memory. Here we go again.

So I chip in. And occasionally the model says, actually, no. Then carries on.

When I go to check, it turns out the model was right. I was about to take us down a blind alley. It averted that by having read a little more than me, or by scurrying off to check before accepting my position.

This literally never happened in the summer. We’re in a different place now.

The frequency

It’s still occasional. But the frequency is going up. It probably happens two or three times a day when I’m especially busy. Every time it does, I hop on the group chat and send the huddle meme. There’s an undertone of “thanks mate” when it happens. Or “ha, yeah, good point” - then the AI just carries on, like it assumes I must be joking.

Backing out

YOLO mode felt reckless not long ago. Now it’s mainstream. This might be part of why. If the human is getting in the way while the model is working, maybe the human ought to back out of the inner loop. A little.

Commercial pilots are often required to use autopilot during cruise. Hand-flying is the exception, not the rule. The automation isn’t optional - it’s preferred, because it’s more reliable at that level of the task. Human skill still matters for edge cases and overall judgement. But for the middle part, you’re expected to let the system work.

I suspect this will only increase. Sooner or later you might find employers preferring you to keep out of the inner loop. The evidence will accumulate. The direction is already visible.

You’re being trained to defer. And the training is working, because the model keeps being right.